A FACT360 BLOG SERIES – PART 2

Professor Mark Bishop,

FACT360, Chief Scientific Adviser

[email protected]

In his second post (read Part 1 here) in ‘The Science of AI’ series, FACT360’s Chief Scientific Adviser, Professor Mark Bishop looks at ‘computation’ and how its limitations influence modern AI solutions…

As a modest, geeky, young student of Computer Science, I engaged with my studies convinced that, given enough time, I could program anything that I turned my hand to. In this light, I well remember the profound intellectual shock, felt in my third year as an undergraduate, on discovering the existence of the incomputable; a strange new world of problems which, no matter how fast or advanced the computer, machines cannot solve – neither now, nor at any point in time in the future. And these ‘limitations of computation’ are important signposts in our blogged journey because, by shining the ‘black-light of the incomputable’ upon the deepest mysteries of Artificial Intelligence, we reveal deep insights into Cognitive Science – the ideology that the mind/psychology is fully explainable in purely computational terms. But first, to begin to understand why there exist problems that are impossible to solve on your PC, we need to have a clear understanding of just ‘what’ a computer is and ‘how’ computation works.

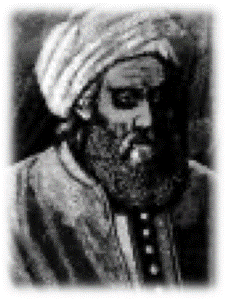

Opposite is an image of the scholar Abū Abdallāh Muḥammad ibn Mūsā  al-Khwārizmī, a ninth century Persian mathematician based at the ‘House of Wisdom’ in Bagdad. Amongst other things, Al-Khwarizmi outlined a finite series of steps, or transformations (‘Al-jabr’; algebra), which, when carefully followed, produced the (real) roots of quadratic equations. In so doing Al-Khwarizmi (or ‘Algorismus’ as he was known by Latin speakers) had not only spoken of, but also given his own name to, a new tool in the art of computation; the ‘algorithm’. Indeed, historically, the term computer did not refer to a machine but to a human; a mathematician who ‘calculated by rote’ in accordance with an ‘effective method’ – a mathematical process being ‘effective’ if and only if it demands no ‘insight’ or ‘ingenuity’ from the [human] computer to follow and produces a result in a finite number of steps.

al-Khwārizmī, a ninth century Persian mathematician based at the ‘House of Wisdom’ in Bagdad. Amongst other things, Al-Khwarizmi outlined a finite series of steps, or transformations (‘Al-jabr’; algebra), which, when carefully followed, produced the (real) roots of quadratic equations. In so doing Al-Khwarizmi (or ‘Algorismus’ as he was known by Latin speakers) had not only spoken of, but also given his own name to, a new tool in the art of computation; the ‘algorithm’. Indeed, historically, the term computer did not refer to a machine but to a human; a mathematician who ‘calculated by rote’ in accordance with an ‘effective method’ – a mathematical process being ‘effective’ if and only if it demands no ‘insight’ or ‘ingenuity’ from the [human] computer to follow and produces a result in a finite number of steps.

Unfortunately, because neither ‘insight’ or ‘ingenuity’ are strictly defined, the notion of ‘effective method’ (qua ‘mechanical process’) is not rigorously pinned down; a puzzle that, in 1936, famously inspired a young mathematician named Alan Mathison Turing to reflect on the subject. As Robin Gandy, a PhD student supervised by Turing at Cambridge, latterly recalled:

“To the question, ‘What is a mechanical process?’ Turing returned a characteristically cryptic answer, ‘Something that can be done by a machine’ and subsequently embarked on the highly congenial task of analyzing the general notion of a computing machine.”

(Gandy, 1974)

A modern definition of the algorithm – intimately intertwined with that of an ‘effective method’ – states that a procedure to achieve a specified result is an algorithm if and only if each step is ‘blind’ (i.e. it needs no additional ‘human insight’ to evaluate); steps ‘follow on blindly’, (no human insight is needed to determine the next step in the process) and, critically, that a result is guaranteed after a finite number of steps. Hence, sensu stricto, under the formal, mathematical definition of ‘algorithm’, a process to calculate, say, the area of a rectangle is ‘algorithmic’ (because, assuming the programmer is competent, a result is guaranteed in a finite number of steps), whereas [somewhat counterintuitively] a computer’s operating system software is not (because, if the engineers have done a good job, the software should never terminate with the [infamous] Windows-blue-screen-of-death, but continue running indefinitely).

In his specification of what subsequently became known as the Turing Machine (TM) – a system we will explore in a later blog – Turing described an idealisation of a ‘human computer following an effective method by rote’, in a formal model rich enough to encompass ‘everything that be achieved by the human computer’, and with this was able to prove the existence of a large set of functions which are, fundamentally, not Turing Machine computable; limitations which, a fortiori, also apply to any modern PC. And for anyone interested in computational linguistics, this has significant implications, because if ‘understanding’ a block of text is not a computable function, there will be hard limits to the ‘degree of linguistic understanding’ that can ever be realised by machine.

As this blog series progresses I will be examining some of the methods that we are using at FACT360 to overcome these limitations so keep an eye on our updates to make sure you don’t miss a post – next time I will be looking at another key concept regarding AI when I discuss – ‘What is Thought?’

Read Part 3 here – “Thought as ‘Association’”

Professor Mark Bishop is FACT360’s Chief Scientific Adviser and to see how these leading-edge scientific techniques can be applied in your organisation download our White Paper “The Science of FACT360” or get in touch [email protected].